Online Safety Act: What went wrong

Or how regulators are continually messing up technology laws

The Online Safety Act recently rolled out in the UK, and you'll be very happy to hear it's a raging dumpster fire.

But this newsletter isn't about that per se. Instead, buried in this poorly considered mire, there's a kernel of truth, a little glowing nugget that reveals what's wrong with much technology regulation.

Before we get to this little-discussed point, let’s cut up some context into bite-sized chunks and have a good old gobble.

So, the Online Safety Act 2023. Theoretically it’s a Pretty Good Thing. You know, it’s hard to feel overly negative about an initiative that tries to stop online content that’s illegal or harmful to kids.

Put it this way: a statement like “we should stop young kids watching porn” is so agreeable that only the nuttiest amongst us could even begin to disagree with it.

Even the way the UK government is trying to get companies to comply makes sense: businesses are told to alter algorithms, remove harmful material, and verify user ages, or risk being fined.

Again, on paper, cool. It makes sense. Until we start thinking about the true test of any policy: implementation and enforcement.

Fundamentally, a law is only as good as the system’s ability to ensure people follow it.

For example, it's easy to declare downloading Severance illegal, but unless you actually have the staff, resources, and legal bandwidth to catch and prosecute pirates, the law's not worth the paper it's written on. It's more guideline than requirement. People will watch copies of the show safe in the knowledge that nothing bad will happen to them.

The Online Safety Act makes this approach to media piracy look like the work of geniuses. Its implementation involved the UK government shitting the bed, rolling around in that shit, and then topping it all off by taking a little leap into a river of shit.

This is for two main reasons.

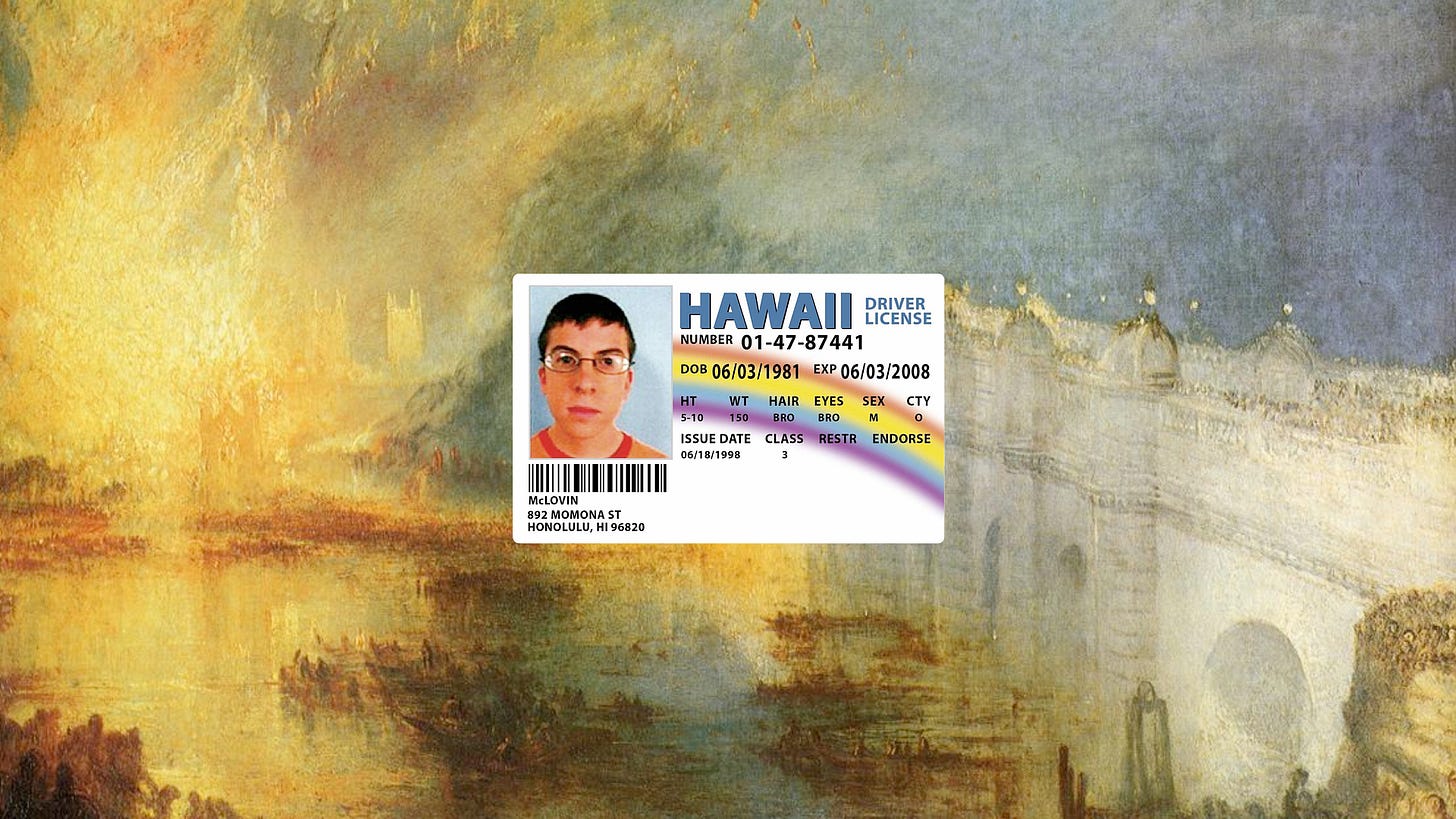

The first is security. To stop children accessing pornography, the burden of proof falls on adults. People need to prove they’re not children, meaning they have to enter their ID details on specific portals.

Currently, it's estimated that around 6,000 different porn sites accessible in the UK need age verification. But… where does this data go? What are the guidelines for how these sites store identity documents? How are those guidelines checked and enforced?

You only need to look at how the Tea App had its databases “breached” to witness the damage leaked IDs can cause, and the UK government has just forced random companies to hold vast amounts of personal data with little oversight of how they do it.

It's a privacy nightmare waiting to happen. But we can trust porn sites to be responsible, right? Right? RIGHT?

The second problem with the Online Safety Act is even more damning: it’s laughably easy to bypass.

All you need to do is download a VPN, set your location to another country, and wank to your heart's content. VPN apps are already topping the app store charts in the UK, and you know that young people will be quick to adapt to this new reality, probably far quicker than older folks.

What we have then is a series of regulations doing almost nothing to actually achieve their aims. The Online Safety Act is a poorly considered mess.

And this is where we circle back to the beginning of the piece. The mistake regulators and lawmakers make when they try to deal with issues amplified by the online world is that they view them as technical rather than cultural.

The Online Safety Act is the equivalent of trying to solve knife crime by having someone come into your kitchen to check you're old enough to use one. Oh, and this person is also totally fooled by a child wearing a fake moustache.

In reality, this wouldn’t happen, because, generally, people understand that stabbings are a cultural issue, rather than a technical one. The issue is less the existence of knives and more the factors that drive people to use them aggressively.

That understanding hasn't yet spread to discussions of technology.

I mean, there's already a raft of tools available to stop children accessing harmful content online. There are filters, protections, and safeguards on almost every device on the market today. If children are constantly accessing harmful content, it's because these settings haven't been enabled by parents or guardians.

Instead of focusing on these pre-existing methods and trying to educate the public, the government focused on providing an overarching technical solution to what’s clearly a societal problem.

This, I believe, is due to regulators seeing the online world as a tool rather than another plane of reality.

Consider how the issue of drink driving was dealt with. When drink-driving bans first came in, fines and regulation alone didn't stop drunk people getting behind the wheel. As various research papers have shown, advertising campaigns had a direct impact on reducing these incidents and changing prevailing public attitudes.

Drink driving wasn't only an issue with roads, cars, or regulations; it was one of attitudes to, and awareness of, its dangers. The same is true of children's safety online.

Ensuring big companies are held to account through laws and fines is important, but a wide-ranging ban that's easily bypassed will achieve nothing without investment in ushering in a cultural shift.

Tools to protect children already exist, but these are at a micro level rather than a macro one. And that’s where the focus should be: spreading this knowledge and making it a cultural imperative to shield the young from the worst of the internet.

There's a whole gamut of other issues we could discuss about the Online Safety Act (is it actually the first step in a statewide surveillance plan that will remove our ability to converse freely online???), but the point of this article is different: to highlight that many in power think about technology and the internet the wrong way.

Solving problems in the online world is no longer a technical issue; it requires the same nuance as tackling problems in the "real" world. And until politicians recognise that, we're going to get more nonsense like the Online Safety Act.